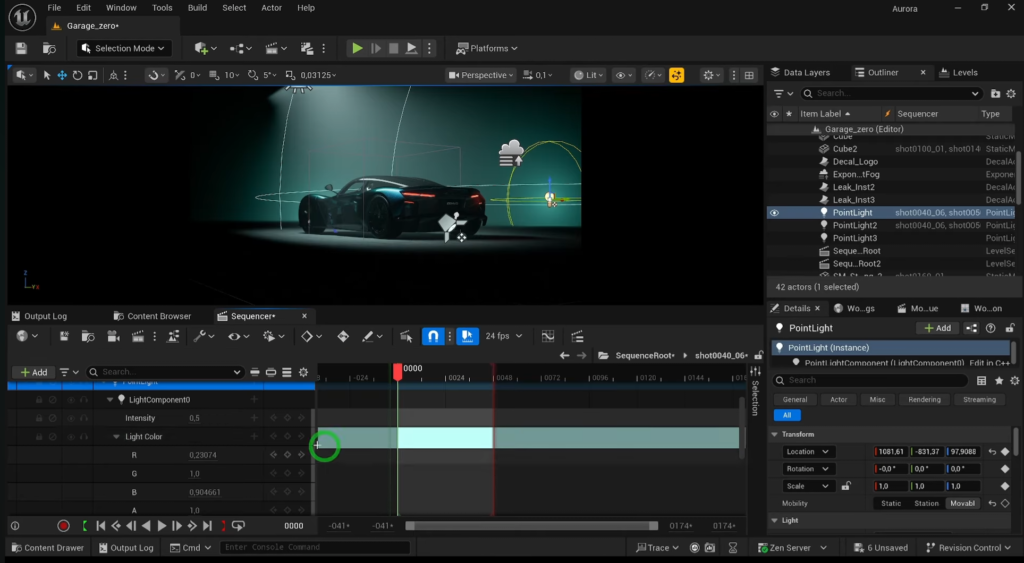

I’m convinced that ComfyUI’s launch of native API nodes back in May of this year signals the end of an era for media generation using locally hosted AI models.

Around that same time, I was doing my own exploration into what I could do on a consumer-grade gaming laptop with 8GB of VRAM. What I found was that, while it was possible, it didn’t feel worth the wait to get an occasional gem that was viable enough for use in a project.

My next phase of AI media generation research shifted to using Veo 3, which generates videos much faster, yet the results still weren’t worth the credits wasted on generations that didn’t meet my quality requirements.

This month, I saw that ComfyUI announced native support for Seedream 4.0. I wasn’t aware of the new API nodes or Seedream until that article. I immediately wanted to know the model’s VRAM requirements, which ended up being a whopping 59GB!

Even an NVIDIA RTX 6000 Ada graphics card at $7k is only going to get you 48GB of VRAM.

It seems pointless to buy something that will be outdated by the time you pay it off, even with 12-month financing at $580 per month.

At that point, you might as well budget for buying enough AI credits and let the services handle the hardware and maintenance for you.
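To put rough numbers on that trade-off, here is a minimal back-of-the-envelope sketch using only the figures quoted above. The function names and the $50 monthly credit budget are placeholders I made up for illustration, not real pricing from any service.

```python
# Figures quoted in this article.
CARD_VRAM_GB = 48          # NVIDIA RTX 6000 Ada
MODEL_VRAM_GB = 59         # VRAM requirement found for Seedream 4.0
MONTHLY_PAYMENT_USD = 580  # 12-month financing payment
FINANCING_MONTHS = 12


def financed_total(monthly_payment: float, months: int) -> float:
    """Total paid over the financing period."""
    return monthly_payment * months


def vram_shortfall(model_gb: float, card_gb: float) -> float:
    """How many GB of VRAM the card is missing (0 if the model fits)."""
    return max(0, model_gb - card_gb)


def months_of_credits(hardware_total: float, monthly_credit_budget: float) -> float:
    """How many months of credits the hardware money would buy instead."""
    if monthly_credit_budget <= 0:
        raise ValueError("monthly_credit_budget must be positive")
    return hardware_total / monthly_credit_budget


total = financed_total(MONTHLY_PAYMENT_USD, FINANCING_MONTHS)
shortfall = vram_shortfall(MODEL_VRAM_GB, CARD_VRAM_GB)

# ASSUMPTION: 50 USD/month is a hypothetical credit budget, not a quoted price.
HYPOTHETICAL_CREDIT_BUDGET_USD = 50
credit_months = months_of_credits(total, HYPOTHETICAL_CREDIT_BUDGET_USD)

print(f"Financed total: ${total}")
print(f"VRAM shortfall: {shortfall} GB")
print(f"Months of credits at the assumed budget: {credit_months:.1f}")
```

Swap in whatever you actually spend on credits each month; the point is only that the card costs about $7k and still comes up 11GB short.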

I’m finding that I use free services, like Whisk, to generate images on a regular basis so I don’t have to store Stable Diffusion models on my machine and wait 10 minutes for results. I can iterate quickly and navigate the 88% trash generations more easily using Google Labs’ infrastructure. This article’s image is a good example: it took 6 images to spark an idea and 20 more to get to one I was happy with. That took minutes on my phone versus hours to manage locally on my laptop.

While I’m sad to see this happening, I understand why. Models that generate media need more resources. While smaller LLMs can run just fine on a local consumer-grade machine, you still have to balance the weights and sacrifice some level of quality.

I’m planning to share more about that as I move towards exploring locally supported AI capabilities with Home Assistant.

As someone who aims to promote free and open-source solutions as much as possible, I’m not sure when I’ll have the desire to pay for an AI media generation service. I only hung onto Google’s until the free trial ended, mainly because it produced more garbage than gold.

I guess we’ll have to keep an eye out for quality to improve and costs of running the models to go down to make it truly worthwhile.