I’ve been learning how to build interactive virtual environments in Unreal Engine 5 with a focus on understanding how to control lighting with DMX. Now, it’s time to explore using TouchDesigner to convert live audio from my Hercules Inpulse 500 DJ Controller into DMX data that is sent to UE5 to create audio-reactive virtual light shows.

Phase One: UE5 DMX Project Template

Below is a video showing the results of me wrapping my head around how DMX works starting with the UE5 DMX template.

From a lighting design perspective, this is garbage!

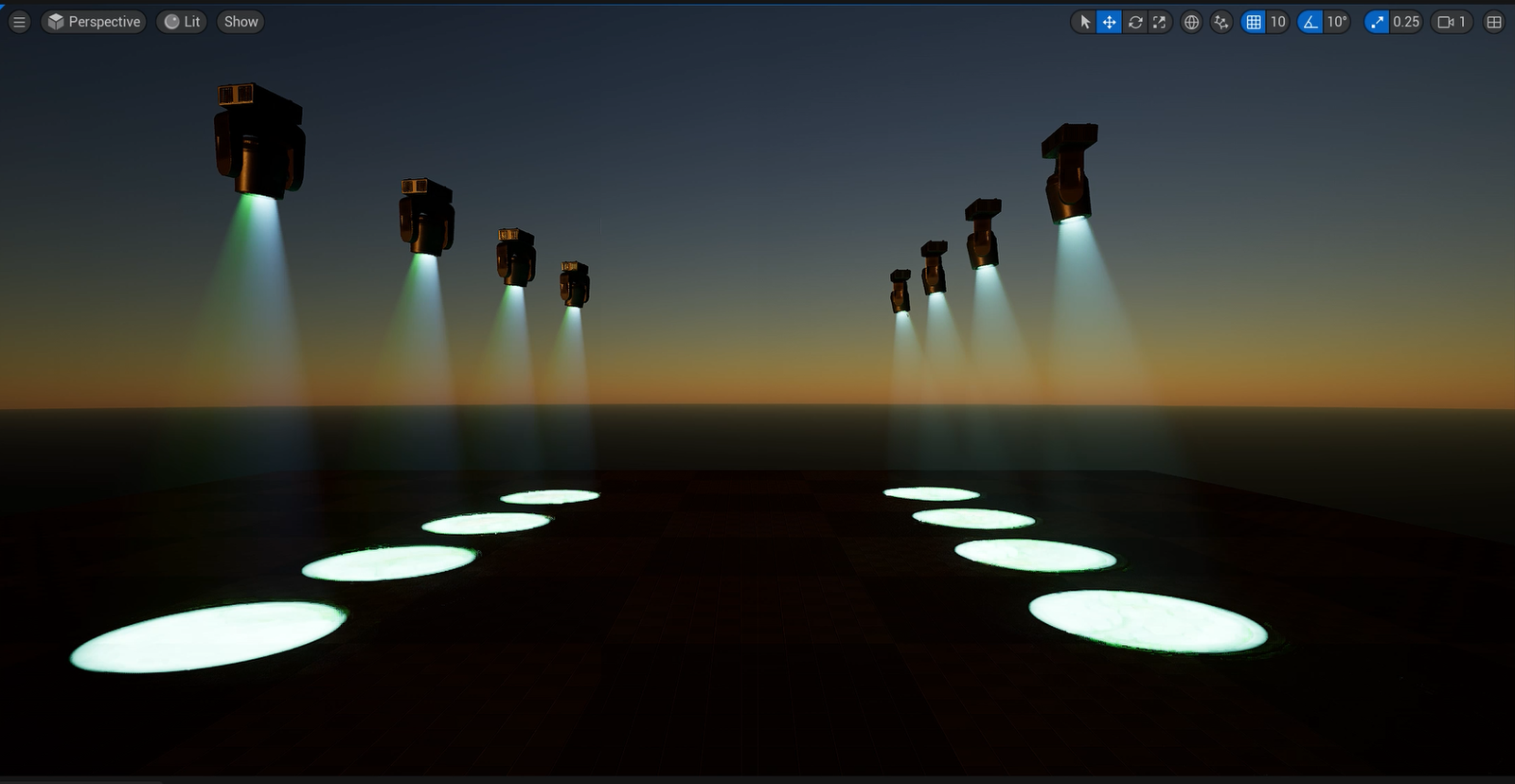

From a technical perspective, this helped me understand how to create groups of fixtures with similar control parameters and animate them using a classic timeline with key frames. I also gained an understanding of how UE5 sends and receives DMX data and how it provides a virtual control panel thanks to plugins.

In the lower left/center portion of this video you can see MyMovingHead_000 group’s control parameters and their associated animation key frames.

In the lower right you can see the DMX output data for each channel in the Activity Monitor.

With what I’ve learned, if I purchase a USB to DMX interface, I should be able to control lighting in the real world using the output data above – as long as my lights have the same control configuration (pan, tilt, zoom, etc).

I should also be able to use that USB to DMX interface with a physical DMX controller to feed data into UE5 to control the lighting inside my virtual world!

I’ll worry about spending money on hardware to run real world experiments another day!

Controllers

I’m not interested in completely replicating a DMX controller’s features. UE5 handles most of that with the DMX Control Console plugin. I’m also not worried about capturing the exact features in a real world light, since I’m still thinking through which one I’d most likely purchase. I’ll wait to replicate the one I buy.

My ultimate goal is to focus on capturing live audio input from my DJ controller, and leverage TouchDesigner’s ability to react to the music to dynamically generate DMX data. I will then send that data into UE5 to control my virtual lights using the Art-Net protocol. Again, that same data should be able to control lights in the real world at the exact same time!

From what I’ve learned, not all physical DMX controllers have a built-in mic for audio reactivity. This one does, and I plan on buying it unless TouchDesigner provides me with everything I need. It also has DMX input and output ports, along with MIDI, which could be useful for future projects.

I’ve also learned that not everyone is a fan of audio-reactive lighting, but I’m interested in seeing how it works when I’m in control of how the lights respond to music mixed with my Hercules Inpulse 500 DJ controller, which is something I already own.

I’ve seen high-end DJ controllers that have built-in DMX light show controls, but I’m not a professional DJ willing to spend $2,500 for an all-in-one solution. Besides, that would be like driving a car without understanding what’s happening under the hood. Where’s the fun in that?

Here’s a picture of my current hardware. I have a ton of fun mixing music with this thing and I’m eager to see if I can work lighting into the party 🥳

My hope is that TouchDesigner will let me set up my DMX data in a useful way that will allow me to create a dynamic light show that I won’t have to control manually. My goal is to focus on the music and let the software manage the lights. I’m certainly not opposed to hitting a button to fire off a motion sequence that I know will work well with the music. I may aim for something like that at some point – especially if I know what music I’ll be playing ahead of time.

Examples

Audio-Reactive Art

To give you a sense of what I’m thinking, here’s an artist who does some crazy things with Unreal Engine and dynamic audio. He’s using Ableton with a MIDI controller to do this whole thing live, so it is slightly different. I eventually plan to add other dynamic virtual visuals beyond lighting, but I also have an interest in controlling something in reality too, and lights would be much easier than exploding particle spheres 😂

If you do watch this, at least give it a full minute to get going!

Here’s a tutorial for creating an audio-reactive particle cloud that I plan to explore further to improve my overall understanding of TouchDesigner. It also seemed like a cool video to include for showcasing what you can do:

Real-Time DMX Special Effects Light Control

One other example I felt was worth sharing was how you can use DMX in video production where lights in the real world match what’s going on in a virtual world. For example, if you have a virtual explosion happen with real actors that respond as if they were in the scene, you can have studio lighting match the virtual lights to cast the glow of the blast on your actors!

This video walks through how that works using two studios located in different countries:

Phase Two: DMX TouchDesigner Tutorial

After considerable digging, I ended up using this next video as my main guide. It gave me just enough clues to work around the differences between the software versions used, even though only a year had passed since the video was made.

That’s a main reason why I don’t like to create step-by-step tutorials. Details change way too fast, making the process of “following along” super frustrating. I knew I wouldn’t need all of this, but that’s typically how I learn…taking tidbits of info from many sources and using what I’m most interested in.

At this phase, my goal will be to keep things simple by using an audio file as input like this project does.

Fixture setup

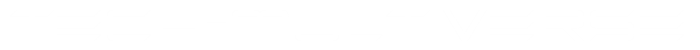

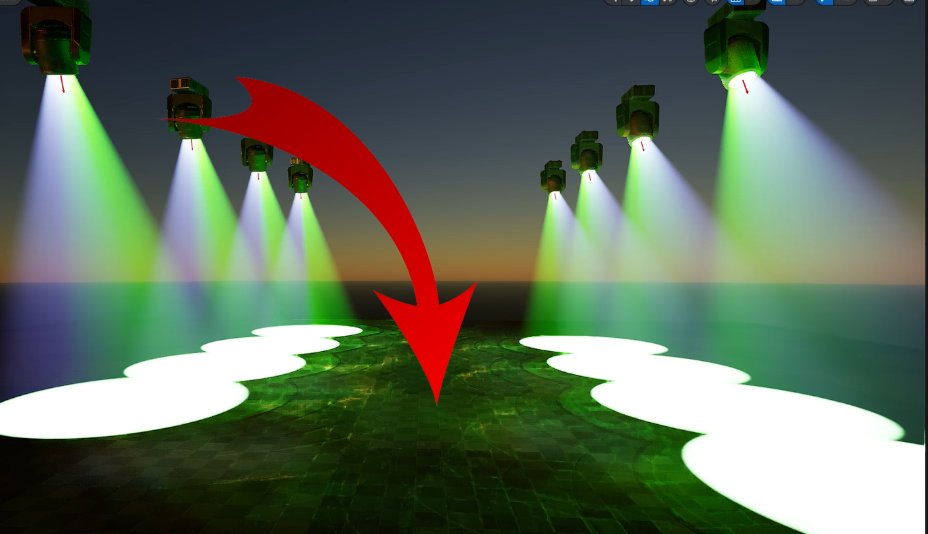

I began by setting up a new basic level in the DMX template, where I dug into the Engine content to get the DMX lighting fixture, Moving Head, and set up five of them in my scene like the video.

I also grabbed a laser, since lasers are cool and it was included in the tutorial!

With my lights in place, I created my own DMX Library.

In that, I created my fixtures for the moving heads and laser with their associated parameters as functions.

Next, I patched my fixtures to open channels within my DMX Universe. I’ll need these in TouchDesigner later!
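To illustrate what patching amounts to, here’s a minimal Python sketch that hands out consecutive, non-overlapping channel ranges within a single 512-channel universe. The fixture names and channel counts are made up for illustration and are not the values from my DMX Library; UE5 does this for you in the DMX Library editor.

```python
# Hypothetical fixtures: (name, number of DMX channels each one uses).
# These counts are illustrative assumptions, not real fixture profiles.
EXAMPLE_FIXTURES = [
    ("MovingHead_1", 8),
    ("MovingHead_2", 8),
    ("Laser_1", 4),
]


def patch_fixtures(fixtures, start=1, universe_size=512):
    """Assign each fixture a (first, last) channel range, back to back."""
    patch = {}
    address = start
    for name, channel_count in fixtures:
        last = address + channel_count - 1
        if last > universe_size:
            raise ValueError(f"{name} does not fit in a {universe_size}-channel universe")
        patch[name] = (address, last)
        address = last + 1  # next fixture starts on the first free channel
    return patch


if __name__ == "__main__":
    for name, (first, last) in patch_fixtures(EXAMPLE_FIXTURES).items():
        print(f"{name}: channels {first}-{last}")
```

The starting channel of each range is the number that has to line up on the TouchDesigner side later.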

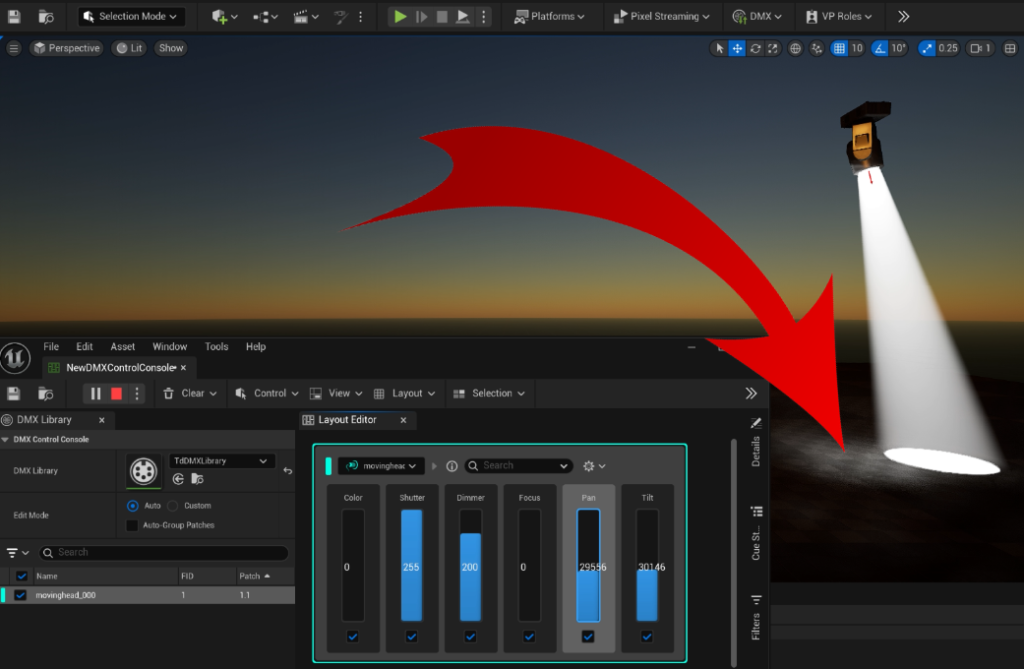

To make sure everything worked, I set up the DMX Control Console and verified I could control my lights after mapping each of the models in my scene to their appropriate fixture patches in my DMX Library.

I also created the camera actor to help control what’s displayed on screen.

With my scene’s environment lighting dimmed, my lights ready to go, and my project’s DMX network settings set up to listen for data, I’m ready to move into TouchDesigner!

TouchDesigner

Once I got used to the nuances of TouchDesigner’s UI, this part was actually straightforward!

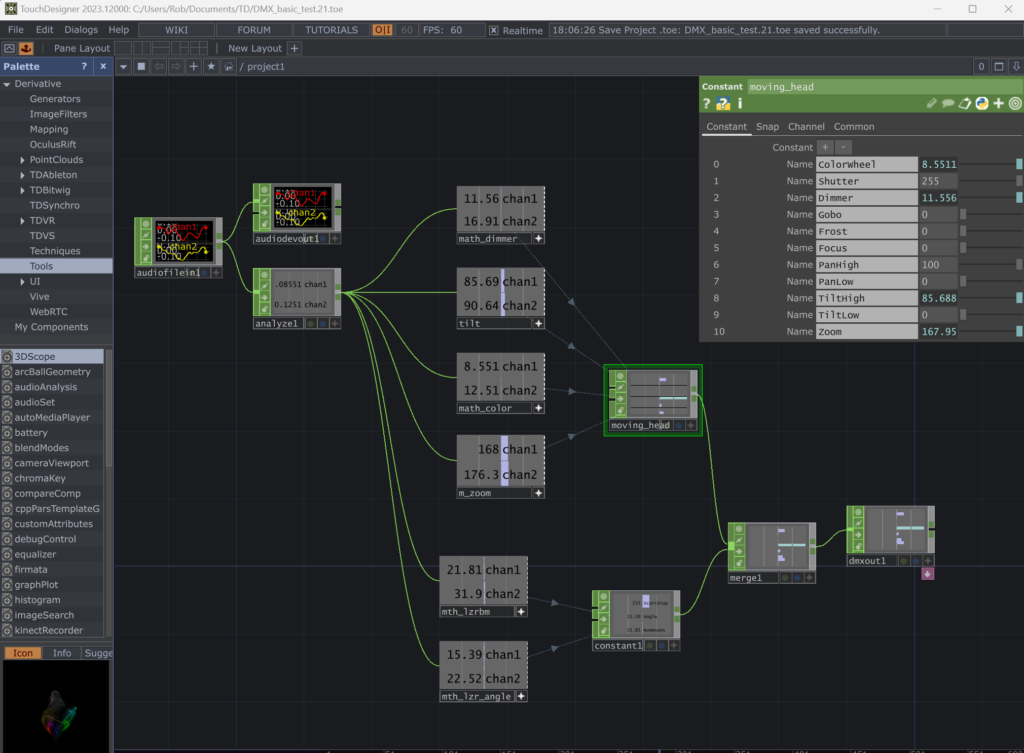

Here’s the final network I ended up with for this phase of the project, which uses an audio file as input.

To describe this, I’ll work backwards from the DMX Output CHOP on the far right, which uses the Art-Net protocol and sets the network address to localhost to match what UE5 is listening on.

I’ve set up Constant CHOPs with the same control parameters as the fixtures in UE5 and merged them into the DMX Output CHOP.
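For anyone curious what actually travels over the network at this step, here’s a rough Python sketch of an Art-Net ArtDmx packet sent over UDP to localhost. This is not how TouchDesigner implements it internally; it’s just the packet layout as I understand it from the Art-Net spec. The function names are my own, and the universe number and channel values are placeholders.

```python
import socket

ARTNET_PORT = 6454  # standard Art-Net UDP port


def build_artdmx(universe, channels, sequence=0):
    """Build an ArtDmx packet carrying 1-512 channel values (each 0-255)."""
    data = bytes(channels)  # raises ValueError if a value is outside 0-255
    if not 1 <= len(data) <= 512:
        raise ValueError("a DMX universe carries between 1 and 512 channels")
    if len(data) % 2:
        data += b"\x00"  # Art-Net expects an even number of data bytes

    packet = bytearray(b"Art-Net\x00")                   # 8-byte packet ID
    packet += (0x5000).to_bytes(2, "little")             # OpCode ArtDmx, low byte first
    packet += (14).to_bytes(2, "big")                    # protocol version 14, high byte first
    packet.append(sequence & 0xFF)                       # 0 disables sequencing
    packet.append(0)                                     # physical port (informational)
    packet += (universe & 0x7FFF).to_bytes(2, "little")  # SubUni byte, then Net byte
    packet += len(data).to_bytes(2, "big")               # data length, high byte first
    packet += data
    return bytes(packet)


def send_dmx(packet, host="127.0.0.1"):
    """Send one packet to an Art-Net listener (localhost is an assumption)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, ARTNET_PORT))
```

Calling `send_dmx(build_artdmx(0, [255, 0, 128]))` would push three channel values to whatever is listening on localhost. Whether universe 0 here lines up with the universe number shown in UE5 depends on the project’s DMX port settings, so treat that number as a placeholder.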

Next, I used several Math CHOPs to create some variation coming out of the audio’s Analyzer CHOP, which I was able to map to the channels I wanted to control.
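The core of what those Math CHOPs do for me is a range remap: take an audio level and stretch it onto the 0-255 range a DMX channel expects. Here’s a small Python sketch of that idea. The function names and the default 0.0-1.0 input range are my own assumptions, not values pulled from my network.

```python
def remap(value, from_low, from_high, to_low, to_high):
    """Linearly rescale value from one range to another, clamped to the target range."""
    if from_high == from_low:
        raise ValueError("source range must not be empty")
    t = (value - from_low) / (from_high - from_low)
    t = min(1.0, max(0.0, t))  # clamp so loud peaks can't overflow the channel
    return to_low + t * (to_high - to_low)


def level_to_dmx(level, low=0.0, high=1.0):
    """Turn an audio level into an integer DMX value between 0 and 255."""
    return round(remap(level, low, high, 0, 255))
```

Narrowing the input range (for example `low=0.1, high=0.6`) makes the light hit full intensity sooner, which is one simple way to get more visible variation out of quiet material.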

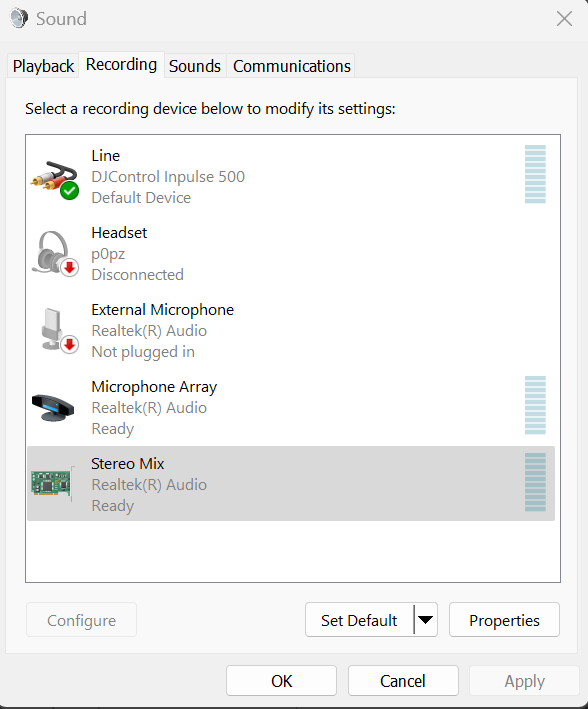

I was able to set up the Audio Input CHOP in a variety of ways, including using my microphone, but that wasn’t impressive at all. While exploring, I saw ASIO options in there, which meant I should be able to connect my Hercules Inpulse 500 controller as input!

I actually struggled with getting my DJ audio to work as the input, until I realized that I could use the Realtek Stereo Mix input, which was disabled for some reason. Once I enabled that and used it as the input for TouchDesigner, I was finally able to access the audio I was driving with my DJ controller!!

Phase Three: Starting from Scratch!

With everything I’ve learned up to this point, it’s time to formally document all the details in a YouTube video tutorial for my future self, and anyone else who’s exploring this!

Here’s the video I recorded where I started from an empty UE5.5.0 project and walked all the way through the process until I was controlling my custom lighting with my DJ Controller:

Future Exploration

Having finished all that, I still have some areas I’d be interested in learning more about.

Rendering

I noticed that my virtual lights were lagging a bit, leaving artifacts that I would almost describe as “trails” on the ground. There was also considerable noise when there was a lot going on.

My assumed cause is that I haven’t upgraded my laptop’s RAM yet. I’m running 3 hefty pieces of software at once on 16GB of RAM, where UE5 was the only app running in the DMX Template Phase where the lights looked nice and clean. I also seemed to notice the noise getting worse when running Djuced, which is further evidence of RAM being a potential solution.

I’ll upgrade my RAM and run this again to see if that helps. Otherwise, I’ll look for differences between UE 5.5 and 5.4’s rendering settings.

16 Bit (Two Channel) Pan & Tilt

I need to gain a better understanding of why it was suggested to set up pan and tilt with 16 bit (two channels), especially since it didn’t seem to make any difference when changing the values in TouchDesigner.
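My working guess is that it comes down to resolution: one 8-bit channel only gives 256 positions across the whole sweep, while two channels (a coarse byte plus a fine byte) give 65,536. Here’s a Python sketch of that arithmetic. The 540 degree pan range in the usage note is an assumption for illustration, not a value from my fixtures.

```python
def split_16bit(position):
    """Split a normalized position (0.0-1.0) into (coarse, fine) DMX bytes."""
    position = min(1.0, max(0.0, position))
    value = round(position * 65535)
    return value >> 8, value & 0xFF  # high byte is coarse, low byte is fine


def step_degrees(range_degrees, bits):
    """Smallest movement a channel of the given bit depth can express."""
    return range_degrees / (2 ** bits - 1)
```

With an assumed 540 degree pan range, `step_degrees(540, 8)` works out to a little over 2 degrees per step, while `step_degrees(540, 16)` is under a hundredth of a degree. That would explain why I didn’t notice a difference with fast, jumpy audio-driven values: the fine byte probably only matters for slow, smooth sweeps.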

Audio Analysis

I’d like to gain a better understanding of how audio is being analyzed in TouchDesigner. I could see extreme power in being able to target specific frequencies and sending those signals to patches that map to lighting that responds directly to those frequencies. For example, deep bass hits being tied to strobe lights, or higher frequencies being mapped to laser patterns.
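To make the idea of targeting frequencies concrete, here’s a plain Python sketch that measures how much energy a block of samples has inside a frequency band using a naive DFT, then turns the bass band into a strobe value. This is not how TouchDesigner analyzes audio, and it’s far too slow for real-time use. The band edges and threshold are guesses I’d expect to tune by ear.

```python
import cmath
import math


def band_energy(samples, sample_rate, low_hz, high_hz):
    """Sum normalized DFT magnitudes for bins with low_hz <= frequency < high_hz."""
    n = len(samples)
    total = 0.0
    for k in range(n // 2 + 1):
        freq = k * sample_rate / n
        if low_hz <= freq < high_hz:
            coeff = sum(
                s * cmath.exp(-2j * math.pi * k * i / n)
                for i, s in enumerate(samples)
            )
            total += abs(coeff) / n
    return total


def bass_to_strobe(samples, sample_rate, threshold=0.2):
    """Return a DMX value: full strobe on a strong bass hit, otherwise off."""
    # The 20-250 Hz "bass" band and the threshold are illustrative assumptions.
    bass = band_energy(samples, sample_rate, 20, 250)
    return 255 if bass > threshold else 0
```

The same `band_energy` call with a higher band, say 2,000 to 4,000 Hz, could feed a laser pattern channel instead.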

Optimize Mapping of DMX Values to Movement

With a better understanding of the audio analysis, I would expect to be able to improve my ability to define patterns within the movement of the lights. For example, having the lights spin and rotate up when the audio drops out was something I found cool, but having that happen with more control would be very useful in a light show.
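One way I could imagine getting that control is to detect the drop explicitly instead of letting it happen by accident. Here’s a hypothetical Python sketch: it watches one audio level per frame and reports a drop once the level has stayed quiet for a set number of frames. The class name, threshold, and frame count are all assumptions I’d need to tune.

```python
class DropDetector:
    """Flags a 'drop' once the audio level stays below a threshold long enough."""

    def __init__(self, threshold=0.05, hold_frames=30):
        self.threshold = threshold      # levels under this count as quiet
        self.hold_frames = hold_frames  # quiet frames required before triggering
        self.quiet_frames = 0

    def update(self, level):
        """Feed one level per frame; returns True while a drop is active."""
        if level < self.threshold:
            self.quiet_frames += 1
        else:
            self.quiet_frames = 0  # any loud frame resets the count
        return self.quiet_frames >= self.hold_frames
```

The `True` result could then switch the pan and tilt channels over to a pre-built spin-and-rise motion instead of the audio-driven values.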

Control Physical Lights

At some point I’d like to spend the money on some physical lights.

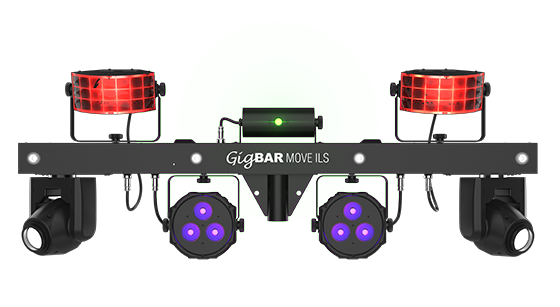

I’ve had my eye on the CHAUVET DJ GigBar Move 5-in-1 Lighting System, which includes 2 moving heads like the fixtures I used in this project plus several others that can be controlled with DMX!

With all the included lights, tripod, and case, it’s a great all-in-one solution for a beginner DJ.

Project Files

To make life easier, I’ve saved both of my Unreal Engine and TouchDesigner project files on GitHub.

Conclusion

When I started, I knew the idea of controlling lights in both a virtual world and the real world was quite ambitious, but I feel good about my overall progress even though my current light design in this project is pretty weak.

With what I’ve learned, I know I could come up with something super cool if I put in the effort to design an environment and get lights dialed in to respond how I want them to, based on the music I’m playing.

At least now I know I can come back and review everything I’ve learned and get back up to speed when I’m ready to add physical lights to this project.

Reflection

It’s almost funny to look back on this past year to see where I was last December, when I was doing some of my first exploration in building virtual worlds using Roblox. The following video is a result of me playing with the Roblox Studio’s Concert Template and is a HUGE sign that I have a clear interest in concert lighting.

Progression

I can say that I’m glad that I graduated from Roblox to Unreal Engine, with Unity exploration in between. While Roblox was a fun way to get started, I lost interest once I learned they had no interest in supporting USD, which I believe is the future of 3D and XR.

We’ll have to see where 2025 takes me and my exploration into building virtual worlds. All I know for certain is that I plan to explore USD quite a bit.

Thanks for following my journey!