I’ve been working to improve my skills in a variety of areas and have realized that it’s time for me to switch to using ComfyUI to run generative AI models and elevate my overall progress.

I’m a project-based learner, so I kick off projects to help me learn new skills and think through new processes and workflows. My most recent project, analyzing UFO/UAP evidence, is mainly intended to help me improve my video editing skills with DaVinci Resolve. To improve the overall quality of my videos, I felt a need to generate fun and engaging multimedia elements to add a little extra spice to my online presence.

I decided to use that as my excuse to learn ComfyUI.

It’s the most powerful open-source, node-based application for creating images, videos, and audio with GenAI.

I have several irons in the fire already, so I’ve been putting off switching from Automatic1111 to avoid adding to my list of things I need to learn.

With further research, I was shocked to learn all the things I could do with ComfyUI! I found it aligns perfectly with my current and future plans for GenAI.

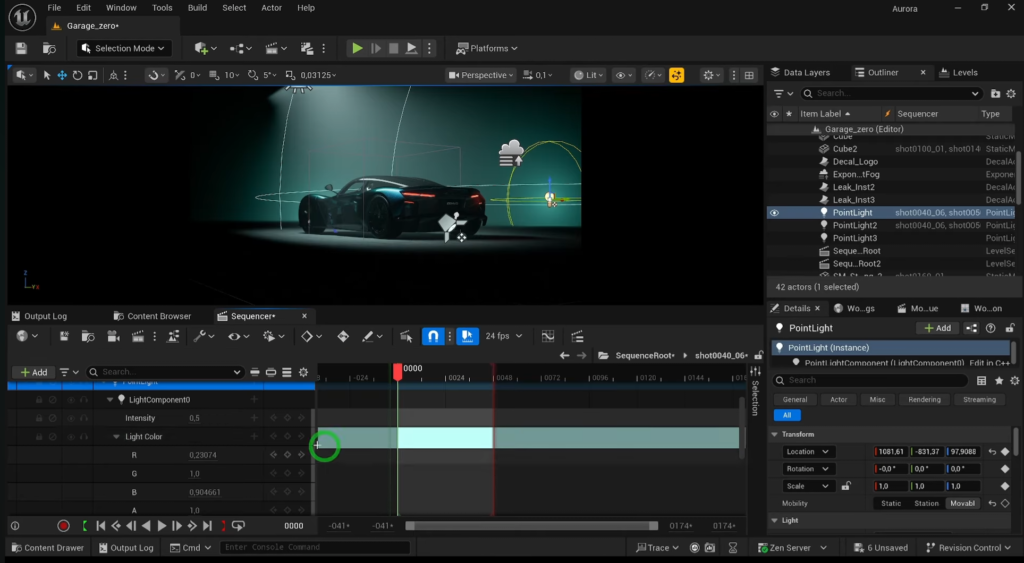

You can even create AI-generated 3D models and audio-reactive videos. That could be GREAT for furthering my exploration into Creating Amazing Audio-Reactive Light Shows in UE5 with DMX and TouchDesigner by incorporating dancing characters and video into virtual environments! I should even be able to use AI-generated music to drive the whole thing. 🎵

Videos!

B-rolls and animations really make a difference in producing quality content, so I’m excited to explore what ComfyUI can do in this category.

I’m always a sucker for adorable animal characters, so I had to share this example.

3D Models

ComfyUI generates models that can be loaded into Blender, too! I’ve been slowly building experience in Blender, but I still have a long way to go.

My goal is to speed up the creative process by having AI handle the modeling skills that I haven’t fully developed yet.

Is USD supported?

According to GPT:

ComfyUI has been utilized in workflows involving synthetic datasets, such as those in NVIDIA’s Omniverse, which employs USD.

I already planned on exploring USD this year, so I may as well do some further digging into what’s possible.

Audio

Background music for my videos is something I believe adds that “spice” I’ve been looking for.

Here’s a great example of the quality I’ve been hoping to reach with AI-generated audio!

I’ve been evaluating different DAWs and want to check out LMMS for making my own audio. Making music can be fun, but it takes time! Whether or not it’s good is another story.

Since ComfyUI can generate MIDI files, I’m wondering if I can use LMMS to mix different clips to speed up the process.

Images (of course)

Of course ComfyUI supports image generation using Stable Diffusion and other models!

Another big reason for me switching is that Automatic1111 doesn’t offer support for Stable Diffusion 3.5, which I’m eager to try out.

Here’s a simple workflow in ComfyUI to do basic latent upscaling.

I’ve worked as a professional illustrator before, but I wasn’t fast enough at it to make it a lucrative career.

I’ve learned that using AI to generate images with prompts is a skill in itself. With the right model, prompts, and settings, it can be a huge time saver!

Installation

I’ve decided to take the easy route and use the pre-built installer to get me up and running quickly.

At some point, I may try the manual installation approach, but only once I’m ready to contribute to their code. Which will be a while!

Security is top of mind at ComfyUI, so they’ve been taking preventive measures to reduce the risk of you downloading malicious nodes. You can learn more in their 2025 Security Update.

Getting Started

Here’s a video by Scott Detweiler from Stability AI showing how to get started and explaining why they use ComfyUI instead of Automatic1111.

I can already see some huge benefits in being able to quickly explore different settings by generating previews and not having to save them until you find the right combination to achieve your vision.

Here’s an example of a workflow I created that allows me to run Stable Diffusion 3.5 with three different KSampler settings and two size variations at once, which is something you could never do in Automatic1111! It’s a spaghettified mess, but SUPER COOL!
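To make the idea of that grid concrete, here is a minimal Python sketch of how three sampler settings and two sizes multiply into six variations. The specific values are hypothetical placeholders, not the settings from my workflow; the key names (`steps`, `cfg`, `sampler_name`) mirror the inputs on the KSampler node.

```python
from itertools import product

# Hypothetical KSampler settings; placeholders, not the values from the workflow above.
sampler_settings = [
    {"steps": 20, "cfg": 4.5, "sampler_name": "euler"},
    {"steps": 30, "cfg": 5.5, "sampler_name": "euler"},
    {"steps": 40, "cfg": 7.0, "sampler_name": "dpmpp_2m"},
]

# Hypothetical (width, height) pairs for the two size variations.
sizes = [(1024, 1024), (1344, 768)]


def build_variations(settings, sizes):
    """Pair every sampler setting with every size."""
    return [
        {**setting, "width": width, "height": height}
        for setting, (width, height) in product(settings, sizes)
    ]


variations = build_variations(sampler_settings, sizes)
for variation in variations:
    print(variation)
```

In the node graph, each of those combinations corresponds to one KSampler branch fed by one latent size, which is where all the spaghetti comes from.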

Tips:

There were some great tips mentioned in the above “Getting Started” video, but I’ll also keep a running list of resources that I find useful here.

Running Stable Diffusion 3.5

To avoid this error when running Stable Diffusion 3.5:

Be sure to follow this recommendation on GitHub to add the text encoders. You’ll need to download the encoders mentioned in this example and adjust the workflow to use the TripleCLIPLoader node to process the prompts. Here’s a visual of how it should look when you’re done:
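For anyone who prefers text to screenshots, the same wiring can be sketched as a fragment of ComfyUI’s API-format workflow JSON, built here in Python. This is only a rough sketch: the node IDs are arbitrary, the encoder file names are assumptions (use whatever files you actually downloaded), and `triple_clip_nodes` is a hypothetical helper of mine, not part of ComfyUI.

```python
import json


def triple_clip_nodes(clip_l, clip_g, t5xxl, positive, negative):
    """Build a workflow fragment: one TripleCLIPLoader feeding two prompt encoders."""
    return {
        # Loads all three text encoders at once.
        "1": {
            "class_type": "TripleCLIPLoader",
            "inputs": {
                "clip_name1": clip_l,
                "clip_name2": clip_g,
                "clip_name3": t5xxl,
            },
        },
        # ["1", 0] means "output 0 of node 1", i.e. the loaded CLIP.
        "2": {
            "class_type": "CLIPTextEncode",
            "inputs": {"text": positive, "clip": ["1", 0]},
        },
        "3": {
            "class_type": "CLIPTextEncode",
            "inputs": {"text": negative, "clip": ["1", 0]},
        },
    }


# Assumed file names; replace them with the encoder files you downloaded.
fragment = triple_clip_nodes(
    "clip_l.safetensors",
    "clip_g.safetensors",
    "t5xxl_fp16.safetensors",
    positive="a red fox in the snow",
    negative="",
)
print(json.dumps(fragment, indent=2))
```

The important part is that both the positive and negative prompt nodes take their `clip` input from the TripleCLIPLoader rather than from the checkpoint loader.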

General

The article 6 essential ComfyUI tips every beginner should know is my first one, since it mentions the new Civitai Green (SFW) for safe-for-work model searches. I personally don’t consider it SFW, but it’s nice that they’re trying.

[More will be added as I find them]

Conclusion

Early into my exploration of using GenAI for video and audio, it’s becoming clear that I’ll need to study up on describing scenes and sounds using industry standard terms. That will help me master prompt engineering to achieve quality results!

Keep an eye on this blog and my YouTube channel to see how I end up using ComfyUI in different projects.

I’m currently working on Episode #2 of my UFO/UAP technical evaluation series and taking extra time to focus on improving the overall quality.

Hopefully ComfyUI will help me create more engaging multimedia elements and take my overall online presence to the next level.