In this article, I’ll use Docker Compose to spin up an Apollo Server that hosts a GraphQL API. Docker will also start a PostgreSQL database, automatically create a users table, and connect GraphQL to it, so you can work with your data right away!

I wanted to set up a simple development environment that allowed me to mimic a production instance of Apollo GraphQL connected to PostgreSQL. I love using Docker to automate processes, so I decided to set this up and share it in case anyone else would benefit.

GraphQL

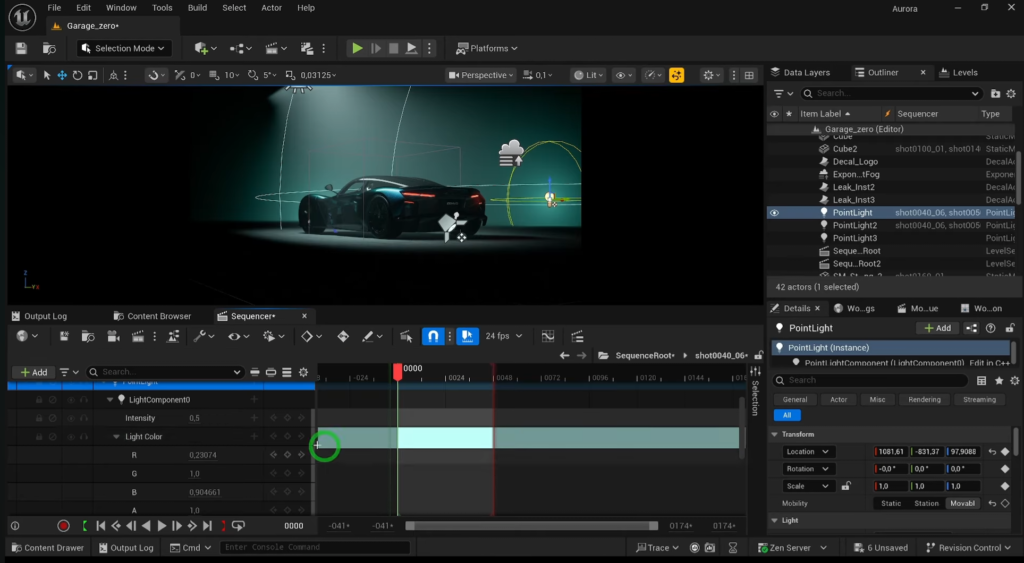

Here’s the final result showing GraphQL in Apollo Sandbox running the “hello world” query to get the current time from PostgreSQL:

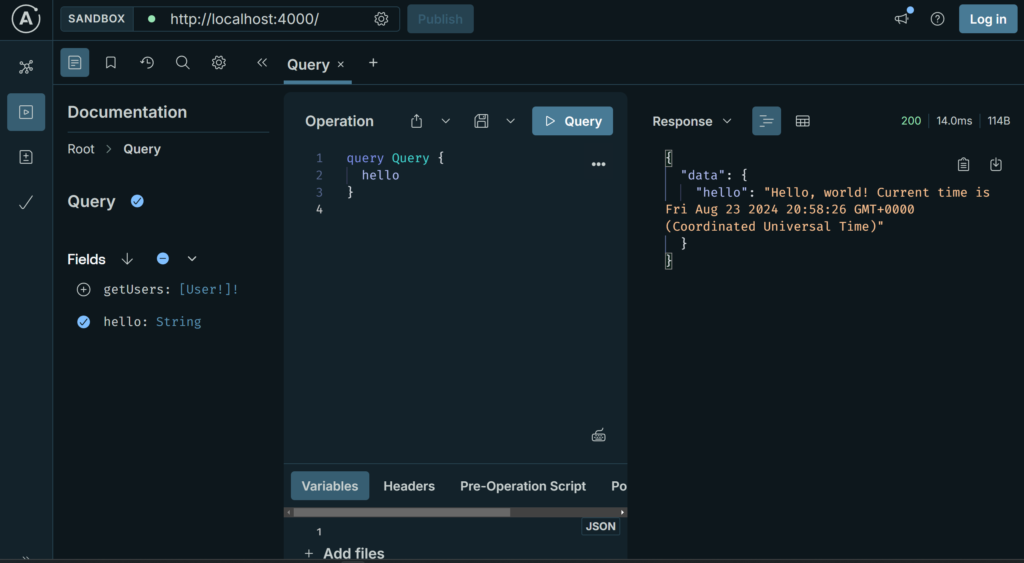
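Apollo Sandbox is only one client. To send the same request from code, a client POSTs a JSON body containing a `query` string and optional `variables`. The sketch below is a minimal Node 18+ version of that. It assumes the server answers at `http://localhost:4000/`: the port comes from the docker-compose mapping, but the `/` path is an assumption. `postGraphQL` is a hypothetical helper, not part of the repo, and `fetchImpl` defaults to Node’s built-in `fetch` so it can be swapped out for testing.

```javascript
// Hypothetical helper: POST a GraphQL operation as JSON and return the parsed response.
// Assumes the Apollo Server from docker-compose is reachable at http://localhost:4000/.
async function postGraphQL(query, variables = {}, {
  endpoint = 'http://localhost:4000/',
  fetchImpl = globalThis.fetch, // Node 18+ ships a global fetch
} = {}) {
  const response = await fetchImpl(endpoint, {
    method: 'POST',
    headers: { 'content-type': 'application/json' },
    // A GraphQL-over-HTTP request body: the operation text plus its variables
    body: JSON.stringify({ query, variables }),
  });
  if (!response.ok) {
    throw new Error(`HTTP ${response.status}`);
  }
  return response.json();
}
```

For example, once the containers are up, `postGraphQL('{ hello }').then((res) => console.log(res.data.hello));` should print the greeting with the current database time.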

Here’s an example mutation that adds a user to the database:

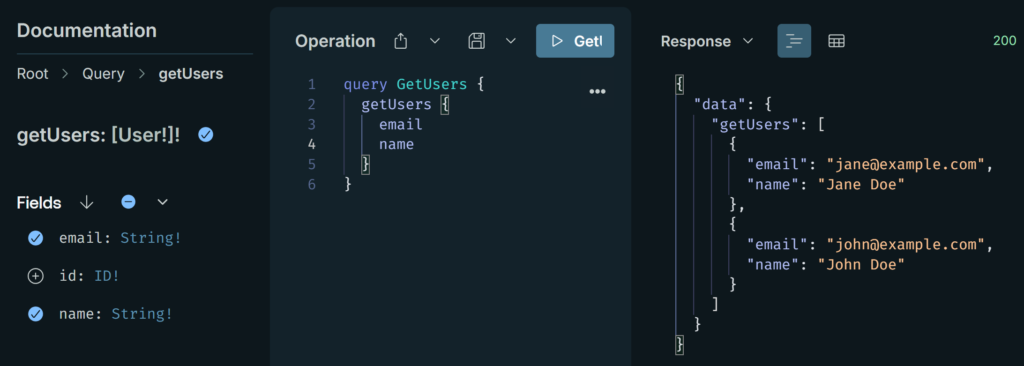
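When the mutation is sent from code rather than typed into Sandbox, it’s cleaner to pass the user’s data as GraphQL variables than to splice it into the query text, where a stray quote would break the document. The sketch below declares `$name` and `$email` with the same `String!` types the schema uses for `addUser`. `ADD_USER` and `addUserRequestBody` are illustrative names, not taken from the repo.

```javascript
// The addUser mutation written with variables; the values travel separately in `variables`.
const ADD_USER = `
  mutation AddUser($name: String!, $email: String!) {
    addUser(name: $name, email: $email) {
      id
      name
      email
    }
  }
`;

// Hypothetical helper: build the JSON body to POST to the GraphQL endpoint.
function addUserRequestBody(name, email) {
  return JSON.stringify({
    operationName: 'AddUser', // matches the operation name declared in ADD_USER
    query: ADD_USER,
    variables: { name, email },
  });
}
```

A name such as `O"Brien` then round-trips through `variables` untouched instead of corrupting the query string.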

Finally, here’s the query to get all the users:
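In text form, the query just asks `getUsers` for the fields it needs. The sketch below holds that query as a string next to a hypothetical `unwrapGraphQL` helper (not from the repo). The helper exists because a GraphQL response can report failures in an `errors` array instead of, or alongside, `data`, so a client should check it before trusting the result.

```javascript
// Query for every user, selecting the fields defined on the User type.
const GET_USERS = `
  query GetUsers {
    getUsers {
      id
      name
      email
    }
  }
`;

// Hypothetical helper: return `data`, or throw if the response carries GraphQL errors.
function unwrapGraphQL(result) {
  if (Array.isArray(result.errors) && result.errors.length > 0) {
    const messages = result.errors.map((e) => e.message).join('; ');
    throw new Error(`GraphQL error: ${messages}`);
  }
  return result.data;
}
```

Because `id` is declared as `ID!` in the schema, a `SERIAL` id of `1` from Postgres comes back serialized as the string `"1"`.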

Docker

Let’s take a look at how Docker is set up!

```yaml
version: '3.8'

services:
  apollo-server:
    build: .
    container_name: apollo-server
    environment:
      - NODE_ENV=development
      - PORT=4000
      - POSTGRES_HOST=postgres
      - POSTGRES_PORT=5432
      - POSTGRES_USER=admin
      - POSTGRES_PASSWORD=admin
      - POSTGRES_DB=mydatabase
    ports:
      - "4000:4000"
    depends_on:
      - postgres

  postgres:
    image: postgres:15-alpine
    container_name: postgres
    environment:
      POSTGRES_USER: admin
      POSTGRES_PASSWORD: admin
      POSTGRES_DB: mydatabase
    volumes:
      - postgres-data:/var/lib/postgresql/data
    ports:
      - "5432:5432"

volumes:
  postgres-data:
```

In the above, we’ve set up two services, apollo-server and postgres, and given both the same database credentials. Postgres uses the Alpine Linux-based postgres:15-alpine image and mounts the named volume postgres-data at /var/lib/postgresql/data, so the data persists across container restarts and rebuilds until the volume itself is deleted (for example with docker compose down -v).
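Because both services sit on the network Compose creates for the project, the apollo-server container reaches the database at the hostname `postgres`, the service name, which is why `POSTGRES_HOST=postgres`. From your own machine, the `"5432:5432"` mapping exposes the same database at `localhost:5432`. Environment variables always arrive as strings, so a small helper like the hypothetical `pgConfigFromEnv` below (not from the repo) could convert the port to a number and fail fast when a variable is missing, before any connection is attempted.

```javascript
// Hypothetical helper: turn the POSTGRES_* variables from docker-compose into a
// config object of the shape pg's Pool accepts, failing early on bad input.
function pgConfigFromEnv(env = process.env) {
  const required = ['POSTGRES_HOST', 'POSTGRES_USER', 'POSTGRES_PASSWORD', 'POSTGRES_DB'];
  const missing = required.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  // Env vars are strings; fall back to Postgres's default port when unset.
  const port = Number(env.POSTGRES_PORT ?? 5432);
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error(`Invalid POSTGRES_PORT: ${env.POSTGRES_PORT}`);
  }
  return {
    host: env.POSTGRES_HOST, // "postgres" inside Compose: the service name
    port,
    user: env.POSTGRES_USER,
    password: env.POSTGRES_PASSWORD,
    database: env.POSTGRES_DB,
  };
}
```

`new Pool(pgConfigFromEnv())` could then take the place of the inline config object in the resolvers file.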

The Apollo server needs a bit more configuration, so instead of pulling a prebuilt image we set its build context to the current directory (.), where Docker reads the following Dockerfile.

```dockerfile
# Use the Node.js 18-alpine base image
FROM node:18-alpine

# Install PostgreSQL client tools to get pg_isready
RUN apk add --no-cache postgresql-client

# Set the working directory inside the container
WORKDIR /app

# Copy the entire project into the container's working directory
COPY . .

# Give execution permission to the init-db.sh script
RUN chmod +x ./init-db.sh

# At container start, run init-db.sh, which installs dependencies and starts the Apollo Server
CMD ["sh", "-c", "./init-db.sh"]
```

We use the Node Alpine image to run the server.

On top of that image, we install the PostgreSQL client tools so the startup script can use pg_isready to check that the database is accepting connections before loading our data.

We set up a working directory and copy all our files into the image. One of those files is init-db.sh, the shell script that initializes the database, so we use chmod to make it executable. Finally, we make that script the container’s startup command (CMD), so it runs every time the container starts.
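That readiness check matters because `depends_on` only controls start order: Compose starts the postgres container first but doesn’t wait for the database inside it to accept connections. If you’d rather do the wait from Node than with `pg_isready`, a generic retry loop does the same job. This is a swapped-in alternative, not what the repo does; `waitUntilReady` is a hypothetical name, and the retry count and delay are arbitrary defaults.

```javascript
// Resolve after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: call `check` until it resolves, retrying on failure.
// `check` is any async function that rejects (or throws) while the dependency isn't ready.
async function waitUntilReady(check, { retries = 10, delayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      return await check(); // ready: hand back whatever the check produced
    } catch (err) {
      lastError = err;
      if (attempt < retries) {
        await sleep(delayMs); // fixed delay between attempts
      }
    }
  }
  throw new Error(`Not ready after ${retries} attempts: ${lastError?.message}`);
}
```

With the pg pool from the resolvers file, the check could be as simple as `await waitUntilReady(() => pool.query('SELECT 1'));` before the server starts. Compose can also wait on a container healthcheck via `depends_on` with `condition: service_healthy`.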

The Data

From here, I’ll only cover the details of getting the data set up. I’m sharing links to each file in the repo, in case you want to dig further.

Here’s the portion of init-db.sh that uses psql to run our SQL and create the users table:

```shell
# Run the SQL command to create the users table
# (psql reads the password from PGPASSWORD instead of prompting for it)
PGPASSWORD="$POSTGRES_PASSWORD" psql -h "$POSTGRES_HOST" -p "$POSTGRES_PORT" -U "$POSTGRES_USER" -d "$POSTGRES_DB" -c "
CREATE TABLE IF NOT EXISTS users (
  id SERIAL PRIMARY KEY,
  name VARCHAR(100),
  email VARCHAR(100)
);"
```

Here’s where we define the GraphQL type definitions for the data we’re working with. Because the schema is typed, Apollo Sandbox can generate documentation for it automatically.

```graphql
type Query {
  hello: String
  getUsers: [User!]!
}

type User {
  id: ID!
  name: String!
  email: String!
}

type Mutation {
  addUser(name: String!, email: String!): User!
}
```

Finally, we’re going to use JavaScript to create a connection pool to interact with the database.

In this file, we also define our resolvers: the functions behind our queries and mutation, which read from and write to the database using SQL.

```javascript
const { Pool } = require('pg');

// Connection pool configured from the environment variables set in docker-compose
const pool = new Pool({
  host: process.env.POSTGRES_HOST,
  port: process.env.POSTGRES_PORT,
  user: process.env.POSTGRES_USER,
  password: process.env.POSTGRES_PASSWORD,
  database: process.env.POSTGRES_DB,
});

const resolvers = {
  Query: {
    // "Hello world" query: ask Postgres for the current time
    hello: async () => {
      const client = await pool.connect();
      try {
        const result = await client.query('SELECT NOW()');
        return `Hello, world! Current time is ${result.rows[0].now}`;
      } finally {
        // Always return the client to the pool, even if the query throws
        client.release();
      }
    },

    // Return every row in the users table
    getUsers: async () => {
      const client = await pool.connect();
      try {
        const res = await client.query('SELECT id, name, email FROM users');
        return res.rows;
      } finally {
        client.release();
      }
    },
  },

  Mutation: {
    // Insert a user with a parameterized query ($1, $2) and return the new row
    addUser: async (_, { name, email }) => {
      const client = await pool.connect();
      try {
        const res = await client.query(
          'INSERT INTO users (name, email) VALUES ($1, $2) RETURNING *',
          [name, email]
        );
        return res.rows[0];
      } finally {
        client.release();
      }
    },
  },
};

module.exports = resolvers;
```
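The try/finally pattern above is correct. For single statements, `pg`’s `Pool` also offers `pool.query()`, which checks out a client, runs the query, and releases it for you. The sketch below is an alternative along those lines, not the repo’s code: the hypothetical `createResolvers` takes the pool as a parameter (so it can be tested with a fake), and a hypothetical `validateUserInput` rejects blank values and anything longer than the `VARCHAR(100)` columns, which Postgres would otherwise refuse with a less friendly "value too long" error.

```javascript
// Matches the VARCHAR(100) columns in the users table.
const MAX_LEN = 100;

// Hypothetical validation for addUser input.
function validateUserInput(name, email) {
  for (const [field, value] of Object.entries({ name, email })) {
    if (typeof value !== 'string' || value.trim() === '') {
      throw new Error(`${field} must be a non-empty string`);
    }
    // Count code points, as Postgres counts characters, rather than UTF-16 units.
    if ([...value].length > MAX_LEN) {
      throw new Error(`${field} must be at most ${MAX_LEN} characters`);
    }
  }
}

// Hypothetical factory: `db` is anything with a pg-style query(text, values) method.
function createResolvers(db) {
  return {
    Query: {
      hello: async () => {
        const result = await db.query('SELECT NOW()');
        return `Hello, world! Current time is ${result.rows[0].now}`;
      },
      getUsers: async () => {
        const result = await db.query('SELECT id, name, email FROM users');
        return result.rows;
      },
    },
    Mutation: {
      addUser: async (_, { name, email }) => {
        validateUserInput(name, email); // reject bad input before touching the database
        const result = await db.query(
          'INSERT INTO users (name, email) VALUES ($1, $2) RETURNING id, name, email',
          [name, email]
        );
        return result.rows[0];
      },
    },
  };
}
```

With Apollo, `createResolvers(pool)` would go wherever the exported `resolvers` object is used today.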

Conclusion

You can access the full code, with instructions to get it up and running, here on GitHub!

I found a few somewhat similar solutions on the web, but none of them used Apollo to serve GraphQL, and none had this much automation, so I figured I’d share this project on my blog in case it comes in handy for anyone else.

I’m still learning how to use GraphQL. From what I’ve dabbled in over the years, it’s a powerful API tool! If nothing else, this could be a fun way to get to know Docker, GraphQL, and Postgres all in one nice little package.

Enjoy!