Four days ago, researchers at Rutgers University’s AGI Research Team officially launched their AIOS GitHub repo, making their project open source! This is extremely exciting to me: an LLM-driven operating system would not only improve the performance and efficiency of LLM agents, but also, by giving agents access to peripheral devices, unlock enormous potential.

… AIOS, an LLM agent operating system, which embeds large language model into operating systems (OS) as the brain of the OS, enabling an operating system “with soul” — an important step towards AGI.

AGI Research

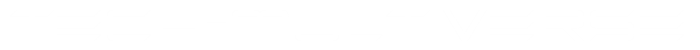

Architecture of AIOS

I immediately fell in love with this project and its straightforward implementation! 🥰

I’m so glad I finally got my Tech-Multiverse GitHub account set up, so I could fork their repo and start sharing what I learn! I may even be able to contribute to this project, since it ties directly into some other AI exploration I’ve been doing but haven’t written about yet. Stay tuned to learn more about that!

LLM Kernel

In their white paper, they introduce an LLM-specific kernel to address a core challenge: completing real-world tasks requires both LLM-level and OS-level resources and functions.

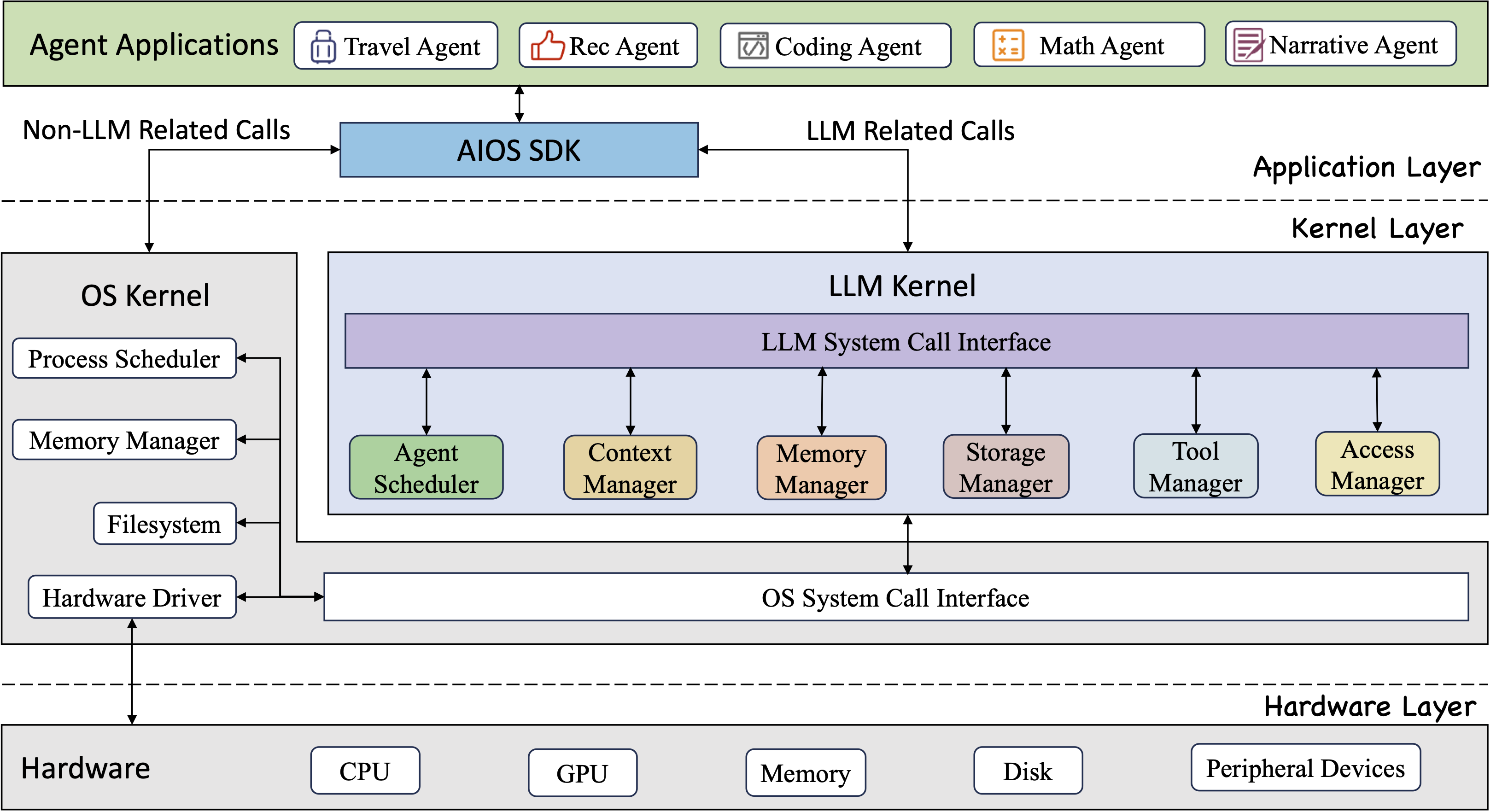
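To make the kernel idea a little more concrete, here is a toy sketch of my own, not code from the AIOS repo: agents submit both LLM requests and OS-level requests to a single queue, and a kernel loop serves them first-in, first-out. The names “Request” and “ToyKernel” and the “llm”/“os” request kinds are hypothetical, and both handlers are stubs standing in for a real model and real system calls.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class Request:
    agent_name: str
    kind: str      # "llm" for model calls; anything else is treated as an OS-level call
    payload: str


class ToyKernel:
    """Hypothetical kernel that serves LLM and OS requests from one FIFO queue."""

    def __init__(self, llm: Callable[[str], str], os_call: Callable[[str], str]):
        self.llm = llm
        self.os_call = os_call
        self.queue: Deque[Request] = deque()

    def submit(self, request: Request) -> None:
        # Agents only enqueue work; the kernel decides when it runs.
        self.queue.append(request)

    def run(self) -> List[str]:
        results = []
        while self.queue:
            req = self.queue.popleft()  # first in, first out
            handler = self.llm if req.kind == "llm" else self.os_call
            results.append(f"{req.agent_name}: {handler(req.payload)}")
        return results


if __name__ == "__main__":
    kernel = ToyKernel(
        llm=lambda prompt: f"[LLM answer to '{prompt}']",
        os_call=lambda cmd: f"[OS result of '{cmd}']",
    )
    kernel.submit(Request("TravelAgent", "llm", "Plan a trip to Paris"))
    kernel.submit(Request("TravelAgent", "os", "read calendar"))
    for line in kernel.run():
        print(line)
```

A real kernel would also need fairness between agents (round robin, priorities) and resource limits; plain FIFO just keeps the sketch short.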

Here’s the Travel Agent example they use to help explain this concept:

Under the Hood

Let’s dig into the code to understand what I love most about this work!

They start off with a “BaseAgent” class, which you can use to build custom agent-driven applications (the top layer of the architecture diagram).

The BaseAgent has various methods that use prompt engineering to direct the LLM on what actions to take. For example, the “check_tool_use” method asks the LLM to decide whether a response calls one of the available tools, such as a search engine. Here’s the Python function, where you can see how the prompt is structured:

```python
def check_tool_use(self, prompt, tool_info, temperature=0.):
    # Ask the LLM whether the given response calls any of the available tools
    prompt = f'You are allowed to use the following tools: \n\n```{tool_info}```\n\n' \
             f'Do you think the response ```{prompt}``` calls any tool?\n' \
             f'Only answer "Yes" or "No".'

    # Retry with a progressively higher temperature until the answer is usable
    while True:
        response = self.get_response(prompt, temperature)
        temperature += .5
        print(f'Tool use check: {response}')
        if 'yes' in response.lower():
            return True
        if 'no' in response.lower():
            return False
        print(f'Temperature: {temperature}')
        if temperature > 2:
            break

    # Falls through and returns None when no valid answer was produced
    print('No valid format output when calling "Tool use check".')
```

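The prompt instructs the LLM to respond with a simple “Yes” or “No”. If the response contains “yes”, the method returns True; if it contains “no”, it returns False. Otherwise, it raises the temperature by 0.5 and tries again, giving up (and returning None) once the temperature exceeds 2.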
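To see the retry logic in action without a real model, here is a simplified, self-contained sketch. “ToyAgent” is a hypothetical stand-in for BaseAgent whose “get_response” replays canned answers instead of calling an LLM. It keeps the same temperature schedule as the original, but only looks at the first word of the answer, since a substring check would read “I don’t know” as “no” (“know” contains “no”).

```python
from typing import Iterator, List, Optional


class ToyAgent:
    """Hypothetical stand-in for BaseAgent, backed by canned responses."""

    def __init__(self, responses: Iterator[str]):
        self._responses = responses
        self.temperatures_tried: List[float] = []

    def get_response(self, prompt: str, temperature: float) -> str:
        # A real agent would query the LLM here; this stub replays canned answers.
        self.temperatures_tried.append(temperature)
        return next(self._responses)

    def check_tool_use(
        self, prompt: str, tool_info: str, temperature: float = 0.0
    ) -> Optional[bool]:
        question = (
            f"You are allowed to use the following tools:\n\n{tool_info}\n\n"
            f"Do you think the response {prompt!r} calls any tool?\n"
            'Only answer "Yes" or "No".'
        )
        while True:
            words = self.get_response(question, temperature).lower().split()
            first = words[0].strip(".,!") if words else ""
            temperature += 0.5  # same schedule as the original: +0.5 per attempt
            if first == "yes":
                return True
            if first == "no":
                return False
            if temperature > 2:
                return None  # no usable answer after the last attempt


if __name__ == "__main__":
    agent = ToyAgent(iter(["Maybe?", "Yes, use google_search."]))
    print(agent.check_tool_use("Find flights to Rome", "google_search"))
    print(agent.temperatures_tried)
```

With “Maybe?” as the first answer, the agent retries once at temperature 0.5 before getting a usable “Yes”. Starting from 0, the loop makes at most five attempts (0, 0.5, 1.0, 1.5, 2.0), just like the original.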

Their example TravelAgent class is initialized with a dictionary of tools it’s allowed to use. So, when “check_tool_use” returns True (above), the Travel Agent has the go-ahead to use its “google_search” tool:

```python
class TravelAgent(BaseAgent):
    def __init__(self, agent_name, task_input, llm, agent_process_queue):
        BaseAgent.__init__(self, agent_name, task_input, llm, agent_process_queue)
        self.tool_list = {
            "google_search": GoogleSearch(),
        }
    ...
```

All of that is represented by the “LLM System Call Interface” portion of the architecture diagram.
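Here is a rough sketch of that routing idea: when the tool check approves a response, the agent looks the tool up by name in its tool dictionary and calls it; otherwise the LLM’s text passes straight through. “Tool”, “FakeSearch”, its “run” method, and “handle_step” are hypothetical names for illustration, not the AIOS API; in the repo, the approval comes from “check_tool_use” and the tools live in “tool_list”.

```python
from typing import Callable, Dict, Protocol


class Tool(Protocol):
    """Anything with a run(query) method counts as a tool in this sketch."""

    def run(self, query: str) -> str: ...


class FakeSearch:
    """Hypothetical stand-in for a search tool such as GoogleSearch."""

    def run(self, query: str) -> str:
        return f"search results for {query!r}"


def handle_step(
    response: str,
    tool_name: str,
    tool_list: Dict[str, Tool],
    check: Callable[[str], bool],
) -> str:
    """Call the named tool if the check approves and the tool exists; else pass the text through."""
    if check(response) and tool_name in tool_list:
        return tool_list[tool_name].run(response)
    return response


if __name__ == "__main__":
    tools: Dict[str, Tool] = {"google_search": FakeSearch()}
    print(handle_step("flights to Rome", "google_search", tools, lambda r: True))
    print(handle_step("Have a nice trip!", "google_search", tools, lambda r: False))
```

Keying tools by name in a dictionary means an agent can only reach the tools it was constructed with, which is a simple way to scope what each agent is allowed to do.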

It looks like storage and memory will work in a similar way, but that part is clearly still a work in progress: each of the following DBStorage methods is just a “pass” stub:

```python
class DBStorage(BaseStorage):
    def __init__(self):
        pass

    def sto_save(self, agent_id, content):
        pass

    def sto_load(self, agent_id):
        pass

    def sto_alloc(self, agent_id):
        pass

    def sto_clear(self, agent_id):
        pass
```

Welcome to My Brain!

What’s funny about this article is that I was literally sitting down to ask ChatGPT which plants could survive on my porch year-round. When I opened my phone, I saw an article about this project and got so excited about what I was learning that I had to write about it immediately!

I’ve only cloned the repo locally and haven’t gotten it up and running yet. My guess is this won’t be the last time I write about this, especially since it ties into another article I’m currently working on. Be sure to subscribe to get new articles by email if you’re interested in following my journey!

Header Image

This article’s header wasn’t generated with Stable Diffusion, but was provided by the AGI Research Team’s AIOS repo.